Historian

A data recorder for rapid archiving and quick playback

If you need to record every value in a high-speed process, a relational database like SQL Server, MySQL or Oracle might not be up to the task. The Historian feature lets your DataHub act like a flight recorder for process data, providing high-speed storage and retrieval capable of processing millions of transactions per second.

Historian features

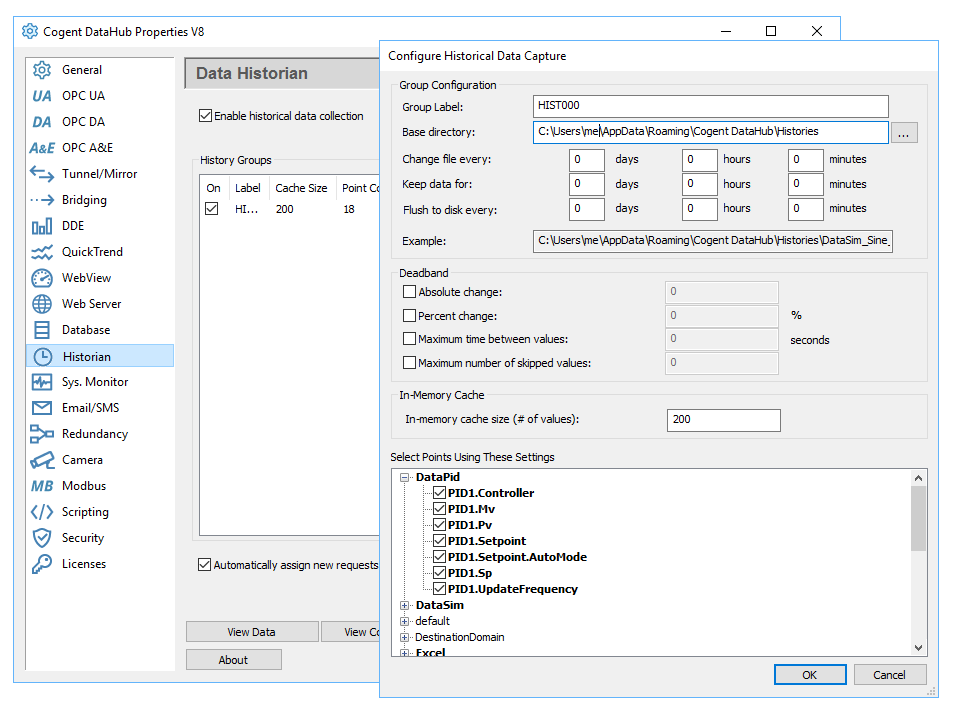

The DataHub Historian feature is a high-speed, low-cost historical data storage mechanism. It stores very little data per value change, so it has a very small on-disk footprint. Its disk space management feature lets you specify how much data to store for each point or how long to keep it, and when to switch to new storage file names, all of which help to optimize disk usage.

The Historian can also start storing a data point's history automatically when a client program requests it. For example, a client that trends specific data points can tell the Historian to start recording them, so the history is available if the client later needs to go back in time and display it. No pre-configuration of the Historian feature is required.
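To illustrate what count-based and age-based retention mean for a single point, here is a minimal, hypothetical sketch. The names `RetentionPolicy`, `max_values`, `max_age_s` and `PointHistory` are assumptions made for illustration, not DataHub configuration options, and storage file name changes are not modeled.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Optional, Tuple


@dataclass
class RetentionPolicy:
    """Hypothetical per-point retention settings (not the DataHub schema)."""
    max_values: Optional[int] = None   # keep at most this many values
    max_age_s: Optional[float] = None  # keep values no older than this, in seconds


class PointHistory:
    """Illustrative per-point history that enforces a RetentionPolicy on append."""

    def __init__(self, policy: RetentionPolicy) -> None:
        self.policy = policy
        # (timestamp_s, value) pairs, oldest first.
        self.values: Deque[Tuple[float, float]] = deque()

    def append(self, timestamp_s: float, value: float) -> None:
        self.values.append((timestamp_s, value))
        self._prune(now_s=timestamp_s)

    def _prune(self, now_s: float) -> None:
        # Count limit: discard the oldest values beyond max_values.
        if self.policy.max_values is not None:
            while len(self.values) > self.policy.max_values:
                self.values.popleft()
        # Age limit: discard values older than max_age_s, measured from the newest value.
        if self.policy.max_age_s is not None:
            cutoff = now_s - self.policy.max_age_s
            while self.values and self.values[0][0] < cutoff:
                self.values.popleft()
```

For example, with `RetentionPolicy(max_values=3)` only the three most recent values survive, while `RetentionPolicy(max_age_s=10.0)` keeps whatever arrived in the last ten seconds of recorded time.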

Technical summary

- Records point name, value, quality and timestamp and makes them available for rapid access.

- Uses in-memory caching: data is held in memory and periodically written to disk. This allows very high-speed queries, because some queries can be served entirely from the memory cache without reading from disk.

- Even when reading from disk, the Historian feature can read more than 1 million values per second on standard computer hardware.

- Highly efficient storage algorithms ensure no data gets dropped.

- History files are created, stored, and accessed automatically.

- In addition to queries of the raw data, the Historian includes built-in query functions that produce analytical information. You can select any time period, from a few seconds to weeks, months, or longer, and retrieve averages, percentages of good and bad quality, time correlations, regressions, standard deviations, and more.

- Access to the Historian is available through the DataHub QuickTrend and WebView applications as well as through the built-in Gamma scripting language.

- Multiple deadbanding options let you save space by eliminating repetitive or unnecessary values.
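Because the Historian stores change events rather than periodic samples, analytical queries over a time window have to account for how long each value was in effect. The sketch below computes two of the statistics mentioned above, a time-weighted average and the percentage of time with good quality. It assumes each stored value holds until the next change and represents quality as a boolean; `window_stats` is a hypothetical name, and this is not the Historian's Gamma query API.

```python
from typing import List, Tuple

# Hypothetical record layout: (timestamp_s, value, quality_is_good), sorted by time.
Record = Tuple[float, float, bool]


def window_stats(records: List[Record], start: float, end: float) -> Tuple[float, float]:
    """Return (time-weighted average of good values, percent of window with good quality).

    Each value is assumed to stay in effect until the next record. Time before the
    first record in the window, or under bad quality, counts as not good, so the
    bad-quality percentage is 100 minus the returned percentage.
    """
    if end <= start:
        raise ValueError("end must be later than start")
    weighted_sum = 0.0  # sum of value * duration over good-quality segments
    good_time = 0.0     # total seconds covered by good-quality values
    for i, (t, value, good) in enumerate(records):
        # The value holds from t until the next record, or until the window end.
        t_next = records[i + 1][0] if i + 1 < len(records) else end
        seg_start = max(t, start)
        seg_end = min(t_next, end)
        if seg_end <= seg_start or not good:
            continue  # outside the window, or bad quality
        duration = seg_end - seg_start
        weighted_sum += value * duration
        good_time += duration
    average = weighted_sum / good_time if good_time > 0 else float("nan")
    percent_good = 100.0 * good_time / (end - start)
    return average, percent_good
```

For records `[(0.0, 10.0, True), (5.0, 20.0, True), (8.0, 0.0, False)]` over the window 0 to 10 s, the value 10 holds for 5 s and 20 holds for 3 s, giving an average of 13.75 and 80% good quality. A plain mean of the stored values would ignore how long each one lasted.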

Technical questions

What is the size of the data stored on disk?

Each stored value takes 18 bytes on disk. Only boolean, integer and floating point types are supported; the DataHub Historian does not store strings.

The number of stored values in a given period depends on the rate of change of the data. The DataHub Historian does not sample the data; it stores every change. If your data point changes only 4 times a day, a day of data consumes 72 bytes on disk. If it changes every 100 ms, it produces 864,000 values per day, which consume about 15.5 MB.

For OPC DA data, you can limit the rate of change by setting the minimum update rate of the OPC connection. This caps the number of values stored per second, so you can compute a worst-case disk usage. Timestamps are recorded on disk with nanosecond resolution.
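The sizing rules above reduce to simple arithmetic. This is a minimal sketch, assuming the 18 bytes per value stated above and treating the OPC minimum update interval as the fastest rate at which a point can change; the function names are hypothetical.

```python
BYTES_PER_VALUE = 18      # on-disk size of one stored value, as stated above
MS_PER_DAY = 86_400_000   # milliseconds in one day


def stored_bytes(num_values: int) -> int:
    """Disk space used by a given number of stored values."""
    return num_values * BYTES_PER_VALUE


def worst_case_bytes(points: int, min_update_interval_ms: int, days: int) -> int:
    """Upper bound on disk usage: every point changes at every update interval.

    Integer arithmetic avoids floating-point rounding; a partial final
    interval is not counted.
    """
    values_per_point = days * MS_PER_DAY // min_update_interval_ms
    return points * stored_bytes(values_per_point)
```

`stored_bytes(4)` gives the 72 bytes quoted above, and `worst_case_bytes(1, 100, 1)` gives 15,552,000 bytes (about 15.5 MB) for one point changing every 100 ms for a day. As a larger example, 1,000 points limited to one update per second for 30 days would need at most 46,656,000,000 bytes, about 46.7 GB.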

Does the historian compress data?

The DataHub Historian does not compress data on disk. It stores only changed values, so there will be no consecutive duplicate values in the file.

Configuring deadbanding in the Historian reduces the number of values saved to disk. This is the best way to limit the number of stored values without missing significant data changes.

In general, the DataHub Historian uses data change events to ensure that all important data is captured, and uses deadbands to filter out insignificant events. This produces either a perfect copy of the data stream (if no deadband is set) or a high-fidelity copy that removes only the “jitter” in the input. Some other historians sample the data periodically, which makes their files on disk much larger and causes them to miss important events that occur between samples.
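As an illustration of how a deadband trims jitter, here is a simple absolute deadband filter. This is one common deadband type, assumed here for illustration; the Historian offers multiple deadbanding options and its exact rules may differ. The name `apply_deadband` is hypothetical. With a deadband of 0 it keeps every change and drops only consecutive duplicates, matching the change-only storage described above.

```python
from typing import Iterable, List, Optional, Tuple

Record = Tuple[float, float]  # (timestamp_s, value)


def apply_deadband(stream: Iterable[Record], deadband: float = 0.0) -> List[Record]:
    """Keep a value only if it differs from the last *stored* value by more than deadband.

    deadband=0.0 keeps every change and drops only consecutive duplicates.
    """
    stored: List[Record] = []
    last: Optional[float] = None  # last value written, not last value received
    for t, v in stream:
        if last is None or abs(v - last) > deadband:
            stored.append((t, v))
            last = v
    return stored
```

For the stream `10.0, 10.0, 10.2, 10.4, 12.0, 12.0`, a deadband of 0 stores `10.0, 10.2, 10.4, 12.0`, while a deadband of 0.5 stores only `10.0` and `12.0`. Comparing against the last stored value rather than the last received value means a slow drift is still recorded once it accumulates past the deadband, instead of being discarded step by step.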

How can I access the data in the Historian?

There are a few ways to access the data in the Historian:

- Using DataHub QuickTrend,

- Using DataHub WebView trend controls,

- Using DataHub scripting (Gamma scripts),

- Using the DataHub OPC UA Historical Access interface,

- Or by reading the history files directly. The data file format is very simple, so anyone could write a tool to read these files.

Can I access the historical data from a web page?

Yes. As mentioned above, you can access historical data in WebView screens using the trend control. Also, since historical data is available in Gamma, it is very simple to write an ASP page, served by the DataHub web server, that implements a historical query web service. Any HTML page could then contain historical data, and XMLHttpRequest calls from JavaScript could read historical data as well.

The Historian feature is included in any WebView license pack or add-on license, and can also be added to any other license pack.

| Product | Code | Features |
|---|---|---|
| Data Historian | ADDHIS | Historian |